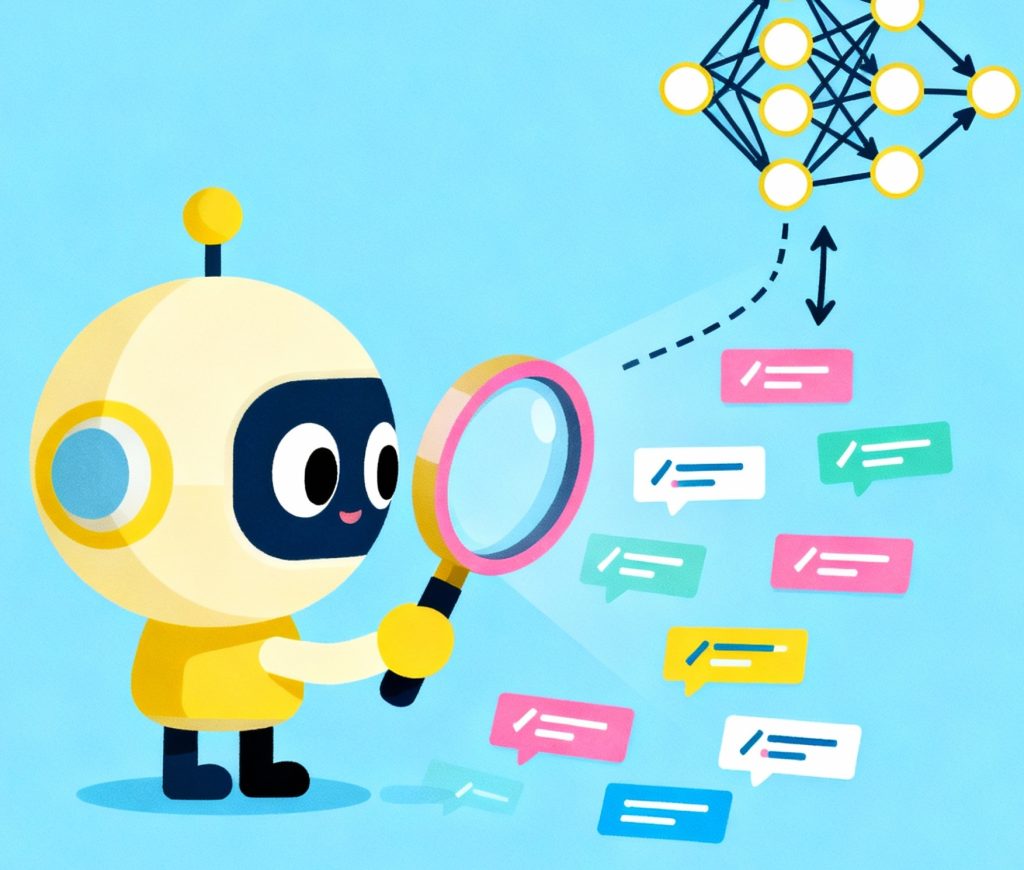

Have you ever wondered how AI detectors work when they claim to distinguish between human- and machine-written content?

With large language models like GPT-5 and Gemini 2 producing human-like text, educators, developers, marketers, and policymakers are asking: How can we verify authenticity in an AI-driven world?

This comprehensive guide explores how AI detectors identify AI-generated content, what technologies they use, how reliable they are, and what the future holds.

What Are AI Detectors and Why They Matter

AI detectors are systems designed to analyse digital content—text, images, code, or even video—to determine whether it was created by a human or an artificial-intelligence model.

They serve vital roles in 2026:

Educators use them to uphold academic honesty.

Journalists verify authenticity in news and research.

Companies and SEO professionals ensure brand credibility and compliance.

Developers and IT managers integrate them into content pipelines to flag AI-generated submissions or customer input.

As AI adoption grows, understanding how AI detectors work is essential for building trust in digital communication.

The Science Behind AI Detection

To understand how AI detectors work, we need to look at four core techniques that power modern detection systems.

1. Statistical Analysis — Perplexity and Burstiness

Most AI detectors begin with statistical text analysis, measuring how “predictable” the writing is.

Perplexity: This measures how surprised a language model is, on average, by each next word in the text.

Lower perplexity → text looks more predictable → more likely AI-generated.

Higher perplexity → text has human-like spontaneity → more likely written by a person.

Burstiness: Refers to the variation in sentence lengths and structures.

Humans naturally mix short, punchy lines with long, complex sentences.

AI models often produce uniform, balanced sentence patterns.

For example, a paragraph with consistent sentence length and tone throughout may score high on AI-likelihood metrics.
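The two metrics above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular detector is implemented: real tools score text with a neural language model, while the add-one-smoothed unigram model here is a stand-in chosen so the example runs without one. The function names (`unigram_perplexity`, `burstiness`) and the choice of coefficient of variation as the burstiness measure are assumptions made for this sketch.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text):
    """Lowercase word tokenizer (an illustrative simplification)."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference_corpus):
    """Perplexity of `text` under an add-one-smoothed unigram model.

    Assumption: the unigram model stands in for the neural language
    model a real detector would use.
    """
    ref_counts = Counter(tokenize(reference_corpus))
    total = sum(ref_counts.values())
    vocab_size = len(ref_counts) + 1  # one extra slot for unseen words
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no tokens")
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    # Perplexity = exp(-average log-probability per token)
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Coefficient of variation (std / mean) of sentence lengths in words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [n for n in (len(tokenize(s)) for s in sentences) if n > 0]
    if len(lengths) < 2:
        return 0.0  # variation is undefined for fewer than two sentences
    return pstdev(lengths) / mean(lengths)
```

With this sketch, three four-word sentences in a row give a burstiness of 0.0, while a one-word sentence followed by a twelve-word sentence scores much higher. Words that never appear in the reference corpus raise the perplexity, which is the "surprise" the text describes.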

2. Stylometric and Linguistic Features

Another key technique is stylometry, the statistical analysis of writing style.

AI detectors measure factors such as:

Vocabulary richness (variety of unique words).

Average sentence length.

Frequency of passive voice.

Punctuation and emoji usage.

Repetition of rare or high-frequency words.

AI models tend to favour grammatical perfection and coherence, but often lack the small inconsistencies, slang, and emotional nuance typical of human writing. These patterns help detectors identify probable AI-generated text.
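A few of the features listed above can be computed with the standard library alone. The sketch below is illustrative: the feature names and the `stylometric_features` function are hypothetical, and passive-voice frequency is left out because it needs a grammatical parser.

```python
import re
from collections import Counter


def stylometric_features(text):
    """Return a small dictionary of style features for `text`."""
    words = re.findall(r"[A-Za-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not words or not sentences:
        raise ValueError("text must contain at least one word")
    counts = Counter(words)
    punctuation = re.findall(r"[.,;:!?]", text)
    return {
        # Vocabulary richness: unique words divided by total words
        "type_token_ratio": len(counts) / len(words),
        # Average sentence length in words
        "avg_sentence_length": len(words) / len(sentences),
        # Punctuation marks per word
        "punctuation_per_word": len(punctuation) / len(words),
        # Share of words that occur exactly once in the text
        "hapax_ratio": sum(1 for c in counts.values() if c == 1) / len(words),
    }
```

A detector would not judge any single value in isolation. Feature vectors like this one are typically fed to a classifier that has learned which combinations are typical of each class.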

3. Machine Learning and Classification Models

Modern AI detectors are themselves machine-learning classifiers trained on vast datasets of both human- and AI-written content.

They learn statistical differences between the two categories and assign a probability score (e.g., “85 % AI-generated”).

Common model types include:

Logistic Regression / Random Forest: for lightweight text classification.

Transformer-based Detectors: fine-tuned from open-source pretrained language models (like RoBERTa or BERT).

Adversarial Models: trained to detect even unseen or paraphrased AI outputs.

This approach aims at cross-model detection: spotting content from AI systems the detector was never explicitly trained on, although accuracy usually drops on unfamiliar models.
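To make the idea of a probability score concrete, here is a minimal logistic-regression classifier written from scratch. It is a sketch under stated assumptions: the two input features (burstiness and type-token ratio), the six training rows, and the hyperparameters are invented for illustration and do not come from any real detector or dataset.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(features, labels, lr=0.5, epochs=2000):
    """Fit weights and bias with batch gradient descent on log-loss."""
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = pred - y  # gradient of log-loss w.r.t. the logit
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_ai_probability(weights, bias, x):
    """Probability (0 to 1) that feature vector `x` is AI-generated."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical training rows: [burstiness, type_token_ratio]; label 1 = AI.
TOY_FEATURES = [
    [0.10, 0.45], [0.15, 0.50], [0.12, 0.48],  # uniform, less varied text
    [0.60, 0.70], [0.75, 0.65], [0.55, 0.72],  # bursty, more varied text
]
TOY_LABELS = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic_regression(TOY_FEATURES, TOY_LABELS)
score = predict_ai_probability(weights, bias, [0.11, 0.47])
print(f"Estimated probability of AI authorship: {score:.0%}")
```

Production detectors train on far larger datasets and usually on learned representations rather than two hand-picked features, but the output has the same shape: a probability, not a verdict.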

4. Watermarking and Content Provenance

AI researchers have proposed adding cryptographic watermarks to generated text or embedding “invisible signatures” in token patterns.

For example:

OpenAI and Anthropic have experimented with watermarking in LLMs.

Google’s “SynthID” embeds imperceptible watermarks in AI-generated images and has since been extended to text, audio, and video.

If adopted widely, this would make AI-content verification more reliable, though it requires cooperation across platforms.
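One scheme proposed in the research literature, often called "green-list" watermarking, shows how a signature can hide in token patterns: the generator quietly prefers tokens from a pseudo-random "green" set that depends on the previous token, and the detector counts how many tokens landed in that set. The sketch below is a simplified illustration of that idea and should not be read as the scheme used by OpenAI, Google, or any other vendor. The hashing rule, the `green_fraction` parameter, and the toy generator are assumptions made for this example.

```python
import hashlib
import math


def is_green(previous_token, token, green_fraction=0.5):
    """Pseudo-randomly mark `token` as green, keyed on the previous token."""
    digest = hashlib.sha256(f"{previous_token}|{token}".encode("utf-8")).digest()
    value = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return value < green_fraction


def watermark_z_score(tokens, green_fraction=0.5):
    """Z-score of the green-token count against the no-watermark expectation."""
    scored = len(tokens) - 1  # the first token has no predecessor
    if scored < 1:
        raise ValueError("need at least two tokens")
    green = sum(
        is_green(prev, tok, green_fraction)
        for prev, tok in zip(tokens, tokens[1:])
    )
    expected = green_fraction * scored
    std = math.sqrt(scored * green_fraction * (1 - green_fraction))
    return (green - expected) / std


def toy_watermarked_sequence(vocab, length):
    """Toy generator that always picks a green token when one exists."""
    tokens = [vocab[0]]
    while len(tokens) < length:
        prev = tokens[-1]
        # Fall back to the first vocabulary entry if no token is green
        tokens.append(next((t for t in vocab if is_green(prev, t)), vocab[0]))
    return tokens
```

Unwatermarked text should produce a z-score near zero, because about half of its tokens fall in the green set by chance. Text from a cooperating generator scores far higher, which is why this kind of detection depends on the generating platform taking part.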

How AI Detectors Work for Different Content Types

Text Detection

AI text detection tools like GPTZero, Copyleaks, and Sapling AI analyze sentence entropy, perplexity scores, and writing style to assess authorship.

Image Detection

AI image detectors use deep-learning networks to identify pixel patterns, inconsistencies in lighting, and metadata clues.

Video and Audio Detection

Deepfake detectors track micro-expressions, eye movements, voice modulation, and compression artifacts that human creators would rarely produce consistently.

Where AI Detectors Succeed

✅ Accuracy on Large Samples: Detectors perform best when analyzing long-form text (over 500 words) since patterns are easier to detect.

✅ Speed: Many AI detectors can process hundreds of documents per second for enterprise workflows.

✅ Scalability: Ideal for academic institutions, publishing houses, and corporate compliance departments.

Where They Struggle — Limitations of AI Detectors

Despite advances, AI detectors face significant challenges.

1. False Positives

Edited or polished human writing can appear too uniform and get incorrectly flagged as AI-generated. This creates ethical issues in education and journalism.

2. False Negatives

Sophisticated AI systems can mimic human irregularities, or paraphrase themselves to bypass detection.

3. Model Lag

Detectors are trained on older LLM outputs. When new models emerge, detection accuracy drops until retraining occurs.

4. Language and Context Bias

Many AI detectors are trained on English text and struggle with other languages or domain-specific jargon.

5. Ethical and Legal Concerns

Without transparency and appeal mechanisms, innocent users may be unfairly accused of using AI.

AI Detector Accuracy in 2026

Current AI content checkers claim accuracy between 70 % and 95 %.

However, accuracy depends on several factors:

| Factor | Description | Impact |
|---|---|---|
| Text Length | Short texts (< 100 words) yield unreliable results | ↓ Accuracy |
| Model Familiarity | Detectors perform better on known LLMs | ↑ Accuracy |
| Paraphrasing Level | Heavy human editing confuses detectors | ↓ Accuracy |
| Domain Knowledge | Technical or creative content shows unique styles | ↑ Reliability |

In practice, most platforms use AI detectors as a first filter, not a final judge.
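A short calculation shows why a headline accuracy figure does not translate directly into trust in an individual flag. The numbers below are hypothetical, chosen only to illustrate the arithmetic: a detector that catches 90 % of AI text, wrongly flags 5 % of human text, and is applied to a pool where 10 % of submissions are AI-generated.

```python
def probability_ai_given_flag(sensitivity, false_positive_rate, ai_share):
    """Bayes' rule: chance that a flagged text really is AI-generated."""
    true_flags = sensitivity * ai_share
    false_flags = false_positive_rate * (1 - ai_share)
    return true_flags / (true_flags + false_flags)


# Hypothetical figures, not measurements of any real tool.
posterior = probability_ai_given_flag(
    sensitivity=0.90, false_positive_rate=0.05, ai_share=0.10
)
print(f"{posterior:.1%}")  # 66.7%
```

Under these assumptions, roughly one flag in three lands on human-written text, which supports treating a flag as a prompt for review and not as proof.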

Why Understanding AI Detectors Matters for Professionals

Software Developers & Data Scientists

Knowing how AI detectors work helps when integrating them into apps, grading systems, or content moderation pipelines.

Marketing Directors & Content Creators

Use AI detectors to ensure your team’s content is human-edited and compliant with SEO policies. Too much AI usage without editing can hurt credibility.

Educators & Researchers

Adopt detectors as support tools—never as final arbiters. Combine them with manual review and clear AI-use guidelines.

Executives & Policy Makers

Understanding AI content detection is crucial for regulating AI disclosure, ethical AI usage, and reputation management in 2026.

How to Use AI Detectors Effectively

Select a trusted detector.

Look for transparency about training data, false-positive rates, and model updates.

Use multiple tools.

Comparing outputs from two detectors reduces bias and improves confidence.

Interpret results cautiously.

Treat detection scores as probabilities, not verdicts.

Combine with human judgment.

A content editor or domain expert can spot contextual clues AI might miss.

Keep models updated.

Outdated detectors may fail against next-gen language models.

Add disclosure policies.

When AI assists in writing, be transparent and build trust through honesty.
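Several of these practices can be combined into one small triage routine: skip texts that are too short to score, compare multiple detectors, and send anything flagged or disputed to a person. The sketch below is one possible arrangement. The thresholds, the outcome labels, and the `triage` function itself are hypothetical, and the detector scores are assumed to be probabilities between 0 and 1 obtained elsewhere.

```python
from statistics import mean

MIN_WORDS = 100  # short texts yield unreliable scores


def triage(text, detector_scores, flag_threshold=0.8, max_disagreement=0.3):
    """Route a text based on scores from two or more detectors."""
    if not detector_scores:
        raise ValueError("at least one detector score is required")
    if len(text.split()) < MIN_WORDS:
        return "too_short_to_score"
    # Large spread between tools means the result is not trustworthy
    if max(detector_scores) - min(detector_scores) > max_disagreement:
        return "detectors_disagree"
    # A high average is a reason for review, never an automatic verdict
    if mean(detector_scores) >= flag_threshold:
        return "flag_for_human_review"
    return "no_action"
```

Every outcome other than "no_action" ends with a human decision, so the score stays a probability that informs a reviewer.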

Best AI Content Detection Tools in 2026

| Tool | Key Feature | Ideal For |
|---|---|---|
| GPTZero | Perplexity & burstiness scoring | Academia and education |
| Copyleaks AI Detector | Multi-language support | Enterprises & publishers |
| Sapling AI Content Checker | Chrome extension integration | Marketing teams |
| Writer AI Content Detector | Business-grade API access | Developers & agencies |
| OpenAI Classifier (Next Gen) | Deep transformer classification | Research & policy analysis |

Note: Use every tool with its ethical and legal implications in mind.

Future of AI Detection

In 2026, AI detection is shifting from basic text scoring toward multimodal analysis that integrates context, intent, and metadata.

Emerging trends include:

Universal AI Watermark Standards: Cross-platform collaboration to embed invisible tokens in AI outputs.

Model-Agnostic Detectors: Designed to spot output from new LLMs without retraining.

Ethical Governance: Governments and organizations may mandate AI usage disclosures.

Human + AI Verification Loops: Combining detector results with editorial and peer review processes.

As the line between AI and human content blurs, the goal is not just detection, but transparency and trust.

Quick Checklist for AI Detector Users

✅ Use AI detectors for screening, not judgement.

✅ Cross-verify with multiple tools.

✅ Stay updated on AI model advances.

✅ Educate teams about false positives and bias.

✅ Integrate detectors into ethical AI frameworks.

FAQ Section

Q1. Can AI detectors confirm with 100 % certainty that text is AI-generated?

No. They provide probabilities based on patterns. Editing, translation, or hybrid authorship can confuse even the best models.

Q2. How accurate are AI detectors in 2026?

Accuracy ranges from 70 % to 95 %, depending on length, domain, and model updates. No tool is completely fool-proof.

Q3. Can I bypass AI detectors by paraphrasing?

Heavy paraphrasing or adding human context can reduce detection rates, but ethical best practice is to disclose AI usage openly.

Q4. Are AI detectors useful for non-text content?

Yes. New tools can analyse AI-generated images, videos, and audio using deepfake detection networks.

Q5. Should organizations mandate AI disclosure instead of relying on detectors?

Ideally both. Disclosure builds trust, while detectors verify compliance and catch unintentional breaches.

Conclusion — Building Trust in an AI-Generated World

So, how do AI detectors work? They blend statistical metrics, linguistic patterns, and machine learning to estimate authorship. They’re powerful, but not infallible.

For developers, educators, and executives, the takeaway is clear: use AI detectors as part of a larger ethics and verification strategy.

As AI generation and detection continue their arms race in 2026, transparency and responsible adoption will be the real detectors of trust.

Call to Action:

If you create or manage digital content, start evaluating AI detectors today. Compare accuracy scores, update your ethics policy, and train your team to use these tools wisely. The future of trust in AI-driven communication depends on it.